IEEE Big Data 2025: The End of Infrastructure Obsession

By Sabri Skhiri, CTO

For years, the conversation at major data conferences revolved around the "5 Vs" (Volume, Velocity, etc.) and the plumbing required to move bytes from A to B.

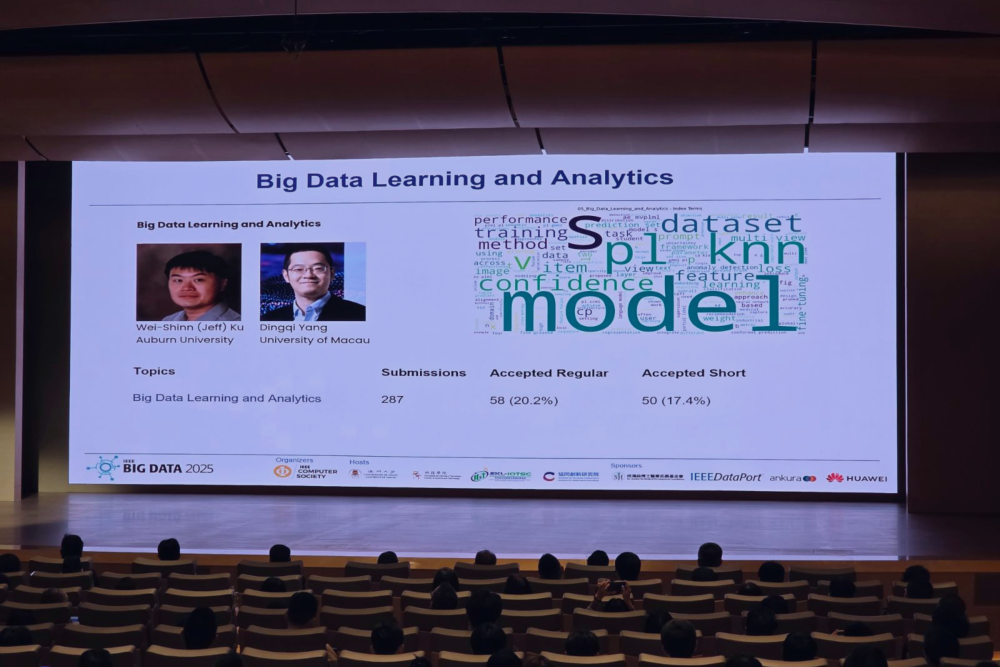

The move away from that plumbing-first conversation was at the heart of the 2025 IEEE International Conference on Big Data (IEEE BigData 2025). A top-tier academic conference now in its 13th edition since 2013, it provides a global forum for researchers and practitioners to share the most recent results in Big Data research, development, and real-world applications.

At this year's edition, the focus definitively shifted.

Companies are no longer debating how to store petabytes; they are engineering systems to reason over them. For the data leader, this signals a transition from managing infrastructure to deriving complex intelligence. Here is the engineering reality from the floor of IEEE BigData 2025.

The Trends: Consolidation and Grounding

1. Embeddings as the Universal Interface

A clear and dominant trend is the use of embeddings to operationalise domain knowledge. We are not just training on raw data. The field is moving beyond purely data-driven models towards hybrid systems where rule-based logic, expert predicates, and traditional feature engineering are encoded as vector representations. This "embedding everything" approach allows for the seamless integration of structured human knowledge with the pattern recognition power of deep learning, making models more interpretable and grounded in physical or business constraints.

Real-world Application: Consider a fraud detection system. In a hybrid system, we can explicitly encode expert-defined fraud patterns as reference vectors within the latent space. Live transaction sequences are then projected into this same space. The prediction relies on the geometric proximity between the live data and these "expert knowledge" anchors. This allows the system to catch attacks that would bypass strict rule-based filters, effectively digitising the fraud analyst's intuition.
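The proximity check described above can be sketched in a few lines. This is a minimal illustration, not a method presented at the conference: the anchor names, the three-dimensional vectors, and the 0.85 threshold are all hypothetical, and a real system would obtain both the anchors and the live embeddings from a trained encoder.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical "expert knowledge" anchors: fraud patterns encoded as
# reference vectors in the latent space (values invented for illustration).
EXPERT_ANCHORS = {
    "card_testing": np.array([0.9, 0.1, 0.0]),
    "account_takeover": np.array([0.0, 0.8, 0.6]),
}

def flag_transaction(embedding: np.ndarray, threshold: float = 0.85) -> dict:
    """Compare a live transaction embedding with every expert anchor.

    Returns the closest anchor, its similarity, and whether the
    similarity reaches the (assumed) alert threshold.
    """
    scores = {
        name: cosine_similarity(embedding, anchor)
        for name, anchor in EXPERT_ANCHORS.items()
    }
    best = max(scores, key=scores.get)
    return {
        "pattern": best,
        "similarity": scores[best],
        "flagged": scores[best] >= threshold,
    }

# A live embedding that sits close to the "card_testing" anchor.
suspicious = flag_transaction(np.array([0.85, 0.2, 0.05]))
# A live embedding that is far from both anchors.
benign = flag_transaction(np.array([0.1, 0.1, -0.9]))
```

Because the decision depends on distance rather than on an exact rule match, a transaction sequence that only resembles a known pattern can still be flagged, which is the behaviour the paragraph above attributes to the hybrid approach.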

2. Computer Vision is Just a Feature

Computer Vision (CV) has virtually disappeared as a standalone track. This isn't a decline; it's an absorption. CV is now a sub-component of Multimodal AI. We are no longer building "vision systems"; we are building reasoning engines where vision, text, and sensor data interact seamlessly. Research now focuses on interaction patterns, such as using vision-language models like CLIP to match images with textual descriptors, which then feed downstream tasks like Retrieval-Augmented Generation (RAG). Vision has transitioned from an end in itself to a powerful modality that enriches broader reasoning systems.

3. Graphs (GNNs) Are an Essential Structure

Graph Neural Networks (GNNs) have graduated from niche academic interest to the backbone of retrieval, and are now recognised for their unique capacity to model relationships and complex systems. Their surge in popularity is closely tied to the rise of LLMs, as graphs provide a structured knowledge framework that can ground language models, enhance reasoning, and improve the accuracy of information retrieval. This marks a critical shift in AI: moving from pure statistical correlation towards true relational understanding.

4. Time Series: The Real World is Temporal

Nearly half of the technical sessions focused on time series modelling. This reflects the operational reality of IoT, finance, and logistics. The challenge is no longer handling large volumes of static data, but understanding and predicting complex temporal dynamics. The research showcased advanced applications, including generative models for simulating future states, anomaly detection in evolving systems like dynamic graphs, and sophisticated forecasting.

The Warning: Security is Non-Negotiable

The transition from prototype to production has triggered a security arms race: securing models against data poisoning, evasion attacks, and prompt injection is foundational to trustworthy deployment. We observed researchers using LLMs to autonomously generate adversarial attacks, finding minimal perturbations in text that flip a sentiment prediction or bypass guardrails. We also saw model inversion attacks, aimed at both classical machine learning models and LLMs, that extract private and sensitive information from the data used to train the model.

The takeaway is binary: If your AI architecture does not include a dedicated security layer, it is not production-ready.

The "No Magic" Moment: (RL) Agent Orchestration

The keynote by Sihem Amer-Yahia on "Training and Reusing AI Agents" addressed the engineering overhead of automated data exploration: an incremental, conversational process where users refine their understanding of what they want from a dataset. Historically, specialised Reinforcement Learning (RL) agents were trained for niche data exploration tasks—like identifying galaxy types in astronomy datasets or filtering specific e-commerce products.

(Note: In this context, an RL agent is a specialised "player"—an agent trained via trial-and-error to maximise a specific numerical reward within a defined environment. This is distinct from an LLM Agent, which relies on broad language prediction to plan and reason.)

These agents achieve high optimisation but suffer from a fatal flaw: rigidity. Every new task requires retraining. The proposed way forward is not to replace these specialised agents, but to orchestrate them.

Instead of retraining a monolithic model for every new query, the architecture maintains a library of pre-trained, expert RL agents. When a new composite task arises, the system defines a composite reward function, calculated as a linear combination of the individual reward functions of the selected specialised agents, and thereby combines their capabilities.
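A composite reward built as a linear combination can be sketched as follows. This is only an illustration of the idea as summarised here: the two reward functions, the state keys, and the weights are hypothetical, and the keynote summary does not say how the weights are chosen.

```python
from typing import Callable, Dict, Sequence

# A reward function maps an exploration state to a scalar reward.
RewardFn = Callable[[Dict[str, float]], float]

def make_composite_reward(
    reward_fns: Sequence[RewardFn], weights: Sequence[float]
) -> RewardFn:
    """Build R(s) = sum_i w_i * R_i(s) from the selected agents' rewards."""
    if len(reward_fns) != len(weights):
        raise ValueError("need exactly one weight per reward function")

    def composite(state: Dict[str, float]) -> float:
        return sum(w * r(state) for w, r in zip(weights, reward_fns))

    return composite

# Hypothetical reward functions of two pre-trained specialised agents.
def galaxy_type_reward(state: Dict[str, float]) -> float:
    # Assumed signal: how well the current selection matches a target type.
    return state.get("type_match", 0.0)

def novelty_reward(state: Dict[str, float]) -> float:
    # Assumed signal: how much unseen data the current step surfaces.
    return state.get("novelty", 0.0)

composite_reward = make_composite_reward(
    [galaxy_type_reward, novelty_reward],
    weights=[0.7, 0.3],  # illustrative weights, not taken from the talk
)
value = composite_reward({"type_match": 1.0, "novelty": 0.5})
```

With these illustrative inputs the composite reward is 0.7 × 1.0 + 0.3 × 0.5 = 0.85. The point of the design is that the individual reward functions come from agents that already exist; only the combination is new.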

This is where the Large Language Model (LLM) enters, not as a generator, but as a planner. Its strength in sequential reasoning and task decomposition makes it a natural candidate for this role: it decomposes the user's high-level intent into a sequence of calls to specific agents, deciding which specialised agents to invoke and how to combine their outputs for a new user query.

However, this only works with rigorous "Context Engineering." The speaker emphasised that success depends on providing the LLM with a structured semantic map containing:

- RL Agent Capabilities: Precisely what each agent in the library can and cannot do, and what its purpose is.

- Data Structure: The structure and semantics of the target datasets.

- Session Goals: The specific boundaries of the user's exploration.

This curated context enables the LLM to function not just as a text generator, but as a knowledgeable planner that can map a user's natural language intent to a sequence of pre-existing data exploration capabilities. This moves the complexity from prompting (guessing magic words) to architecting (defining clear system constraints).
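One way to picture the three-part semantic map listed above is as a typed structure that is serialised into the planner's prompt and then used to check the plan that comes back. The sketch below is an assumption about how such a context could be organised; the class names, field names, and the `validate_plan` helper are hypothetical and were not described in the talk.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List

@dataclass
class AgentCard:
    """Capabilities of one RL agent in the library (hypothetical schema)."""
    name: str
    purpose: str
    can_do: List[str]
    cannot_do: List[str]

@dataclass
class PlannerContext:
    """The structured context handed to the LLM planner."""
    agents: List[AgentCard]
    data_structure: Dict[str, str]  # column name -> meaning
    session_goals: List[str]

    def to_prompt(self) -> str:
        """Serialise the context as JSON for inclusion in a prompt."""
        return json.dumps(
            {
                "agent_capabilities": [asdict(a) for a in self.agents],
                "data_structure": self.data_structure,
                "session_goals": self.session_goals,
            },
            indent=2,
        )

def validate_plan(plan: List[str], context: PlannerContext) -> List[str]:
    """Return the plan steps that name an agent missing from the library."""
    known = {agent.name for agent in context.agents}
    return [step for step in plan if step not in known]

context = PlannerContext(
    agents=[
        AgentCard(
            name="galaxy_classifier",
            purpose="Find galaxies of a given type",
            can_do=["filter by morphology"],
            cannot_do=["join external catalogues"],
        )
    ],
    data_structure={"morphology": "galaxy shape class"},
    session_goals=["explore spiral galaxies only"],
)
prompt = context.to_prompt()
# A plan returned by the LLM would be checked before execution.
unknown_steps = validate_plan(["galaxy_classifier", "price_filter"], context)
```

Checking the plan against the declared library is one concrete form of "defining clear system constraints": a step that references an agent outside the library is rejected before anything runs.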

The Convergence Question

During the Q&A, my curiosity was fully piqued, and I couldn't resist asking the "uncomfortable" engineering question: In these orchestrated agent systems, is convergence—and more importantly, convergence to a global optimum—mathematically guaranteed?

The answer was a flat "No."

We are building systems that must embrace probabilistic outcomes and inherent uncertainty. I found this honest answer quite interesting, though personally, I feel it might be too optimistic. The lack of guaranteed convergence isn't just about potentially landing in a sub-optimal state; it introduces the risk of the system failing more dramatically—essentially, falling short of what it's supposed to achieve. For the enterprise, this is the critical constraint. We cannot promise deterministic perfection in agentic workflows; we can only engineer for robust probability.

The Verdict: The "Big Data" era of sheer volume is over. The era of secure, grounded, and probabilistic engineering has begun.